Tutorials

Walkthroughs, demonstrations, and other projects.

This article offers another perspective on the Visitor Pattern by thinking of it in terms of double dispatch. It also shows a practical use case of how this pattern is applied in .NET.

Let's execute some middleware in our ASP.NET 6 app only under certain conditions, using AppSettings or the request URL and body.

Let's complete the DapperWhereClauseBuilder from the previous issue! Plus: .NET 7 Preview 2, code reviews, gotchas, HTML injection, and more!

Let's see how the order of the middleware in the ASP.NET 6 pipeline affects how they function.

Let's build custom ASP.NET 6 Middleware classes, including a logger and a simple response middleware.

Let's see what middleware is in ASP.NET 6, how it forms pipelines, and how the Program.cs file is involved, then build a simple implementation.

Let's create a class that can generate parameterized SQL for INSERT and UPDATE statements using Dapper!

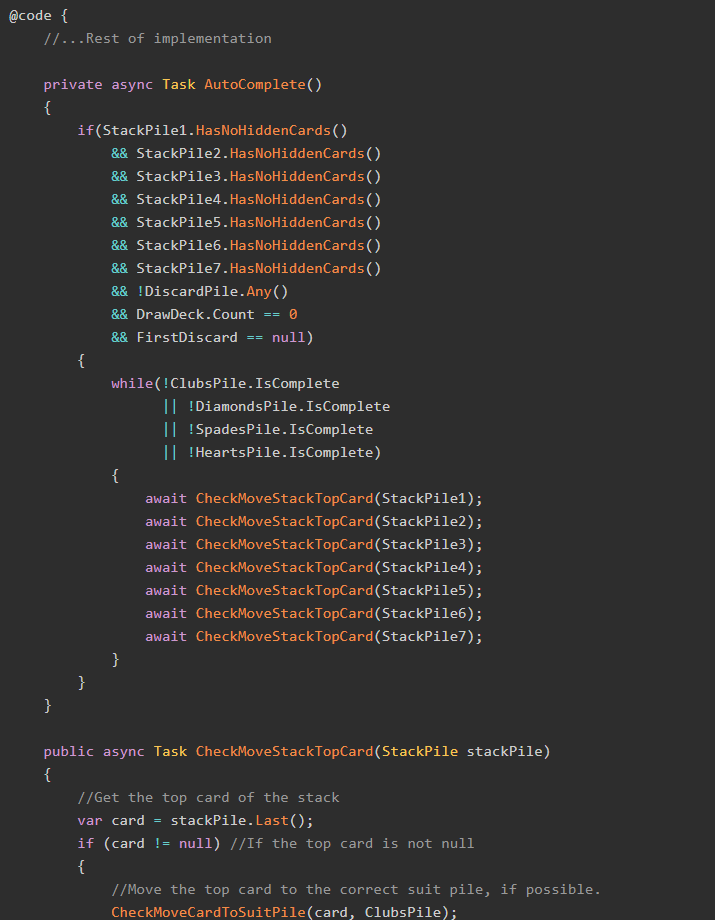

Let's finish our Solitaire in Blazor and C# implementation by adding two nice-to-have features!

C#, .NET, Web Tech, The Catch Block, Blazor, MVC, and more!